This post provides some insights into Cluster API (CAPI), a Kubernetes SIG Cluster Lifecycle project, and shows how to use it together with the Cluster API Provider for AWS (CAPA) on any Kubernetes cluster to create a single vanilla Kubernetes cluster, or a fleet of them, on AWS or other supported providers.

Cluster API is very new. It was inspired by the Kubicorn and Kops projects, and I guess some concepts were borrowed from Rancher’s way of bootstrapping clusters.

At the time of writing, the Cluster API project has reached the v1alpha3 phase, and I think that with the right skills and a backup and recovery strategy one can trust the project and use it in production as well.

In general, on AWS I’d recommend going with EKS with managed node groups, spot instances and auto-scaling support, or in some cases even with the serverless Kubernetes on Fargate solution, if you can live with the currently supported Kubernetes version 1.14.x.

If you need the most recent K8s versions (1.16.x, or soon 1.17.x) for production on AWS, you might want to go with Rancher and the Rancher Kubernetes Engine (RKE).

In my next post I’ll introduce our own Cattle AWS and Cattle EKS implementations with the tk8ctl tool and show you how we define and deploy production ready clusters in a declarative way with a single YAML file.

Bootstrapping Kubernetes from within Kubernetes is nothing new. Rancher has been doing that for a long time: it runs on an embedded K3s cluster and uses CRDs to bootstrap any Kubernetes cluster on any provider.

Well, let’s stick with CAPI+CAPA, not dive into any religious discussions 😉, and see how innovation works and what is coming soon in the CAPI space.

The Cluster API Book and Related Resources

The Cluster API Book and the related resources linked throughout this article provide almost everything one needs to know about Cluster API and how to get started.

The main objective of Cluster API is to provide a set of Kubernetes cluster management APIs and Kubernetes Operators that enable common cluster lifecycle operations, such as creating, scaling, upgrading and destroying Kubernetes clusters, across infrastructure providers, including on-prem environments with bare-metal support.

In short, Cluster API (a.k.a. CAPI) extends the base Kubernetes API to understand concepts such as Clusters, Machines and MachineDeployments, as Chris Milsted describes very nicely in his blog post Taking Kubernetes to the People.

With Cluster API, Clusters, Machines and MachineDeployments become first-class citizens on top of the core Kubernetes API, so you can build, deploy and manage the lifecycle of other Kubernetes clusters from within a Kubernetes cluster such as minikube, a serverless EKS cluster on Fargate, or any other Kubernetes cluster.

With that said, let’s learn about CAPI’s API and framework and how to get started on AWS, and later on bare metal or other providers.

CAPI’s API and Operator Framework

Cluster API provides a framework of Kubernetes Operators and a set of APIs, defined through Custom Resource Definitions (CRDs), to declaratively specify a Cluster together with its Machines. A Machine is controlled by a MachineSet and a MachineDeployment and is created from an infrastructure-specific machine template, e.g. the AWSMachineTemplate.

The Cluster CRD

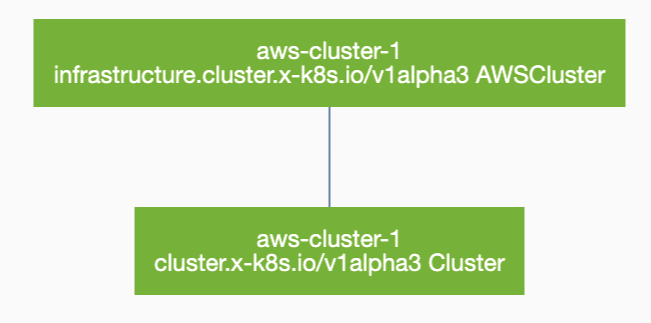

CAPI defines a cluster through a Cluster CRD, handled by the Cluster controller, and in our case through an infrastructure-specific AWSCluster CRD. The controllers run in an external K8s cluster and from there build and manage the lifecycle of a target K8s cluster.

The Cluster and AWSCluster resources are defined in cluster.yaml. Here we define the name of our cluster (aws-cluster-1), the cluster Pod network cidrBlocks, the region where we want to deploy our cluster, and finally the SSH key in AWS. As we will see later, after applying cluster.yaml a small bastion host (Ubuntu 18.04) with a public IP is provisioned in a new VPC on AWS; it provides access to the master and worker nodes.
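To make this description more concrete, here is a minimal sketch of what such a cluster.yaml might look like, written from the shell with a heredoc. The cluster name, region and key name follow the values used in this post; the apiVersion strings and the Pod CIDR 192.168.0.0/16 are assumptions for illustration. The file produced by generate.sh remains the authoritative version.

```shell
# Sketch only: write an illustrative cluster.yaml to a scratch directory.
# Values marked "assumed" are not taken from the generated manifests.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat > "${OUT_DIR}/cluster.yaml" <<'EOF'
apiVersion: cluster.x-k8s.io/v1alpha3      # assumed API version
kind: Cluster
metadata:
  name: aws-cluster-1
spec:
  clusterNetwork:
    pods:
      cidrBlocks: ["192.168.0.0/16"]       # Pod network CIDR (assumed)
  infrastructureRef:                       # points at the AWS-specific object
    apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
    kind: AWSCluster
    name: aws-cluster-1
---
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
kind: AWSCluster
metadata:
  name: aws-cluster-1
spec:
  region: eu-central-1                     # AWS region to deploy into
  sshKeyName: default                      # name of the imported EC2 key pair
EOF

echo "wrote ${OUT_DIR}/cluster.yaml"
```

The generic Cluster object carries the provider-independent settings and only refers to the AWSCluster object, which holds everything that is specific to AWS.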

The Cluster CRD objects can be visualized like this (we’ll see later how to create this view with Octant):

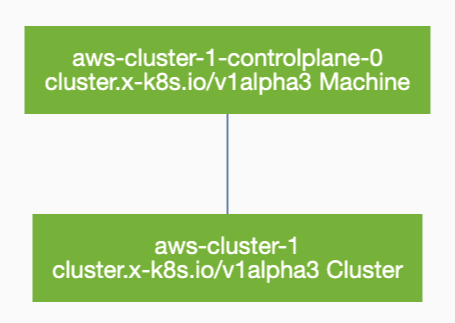

The Control Plane

The Control Plane consists of Machine resources controlled by a MachineSet (think of it like a ReplicaSet), which defines a set or group of machines; these are the Control Plane (master + etcd) nodes. Through controlplane.yaml we define one Control Plane, the K8s version (1.16.2), the bootstrapper (KubeadmConfig) and our infrastructure-specific AWSMachine CRD, which is presented like this:

The ControlPlane CRD objects can be visualized like this:

The MachineDeployment CRD

The MachineDeployment represents the deployment of a set of worker nodes, defined through the spec.replicas field, here within a node pool named nodepool-0. Think of a MachineDeployment like a standard Deployment object in Kubernetes: a Deployment takes care of the scheduled pods, and a MachineDeployment takes care of the scheduled Machines (our worker nodes).

The YAML presentation of the MachineDeployment CRD has a reference to our infrastructure specific AWSMachineTemplate and the KubeadmConfigTemplate:
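As a rough illustration of those two references, the following sketch writes a MachineDeployment manifest with a heredoc. The object names (aws-cluster-1-md-0), the label keys and the apiVersion strings are assumptions and may differ from what generate.sh produces; only nodepool-0, the replica count of 2 and the version 1.16.2 come from this post.

```shell
# Sketch only: an illustrative machinedeployment.yaml in a scratch directory.
OUT_DIR="${OUT_DIR:-$(mktemp -d)}"

cat > "${OUT_DIR}/machinedeployment.yaml" <<'EOF'
apiVersion: cluster.x-k8s.io/v1alpha3        # assumed API version
kind: MachineDeployment
metadata:
  name: aws-cluster-1-md-0                   # hypothetical name
  labels:
    cluster.x-k8s.io/cluster-name: aws-cluster-1
    nodepool: nodepool-0
spec:
  clusterName: aws-cluster-1
  replicas: 2                                # number of worker nodes
  selector:
    matchLabels:
      cluster.x-k8s.io/cluster-name: aws-cluster-1
      nodepool: nodepool-0
  template:
    metadata:
      labels:
        cluster.x-k8s.io/cluster-name: aws-cluster-1
        nodepool: nodepool-0
    spec:
      clusterName: aws-cluster-1
      version: v1.16.2
      bootstrap:
        configRef:                           # how the node joins (kubeadm)
          apiVersion: bootstrap.cluster.x-k8s.io/v1alpha3
          kind: KubeadmConfigTemplate
          name: aws-cluster-1-md-0
      infrastructureRef:                     # what EC2 instance to create
        apiVersion: infrastructure.cluster.x-k8s.io/v1alpha3
        kind: AWSMachineTemplate
        name: aws-cluster-1-md-0
EOF

echo "wrote ${OUT_DIR}/machinedeployment.yaml"
```

As with a Deployment, changing spec.replicas is the intended way to scale the node pool up or down.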

Deploy K8s using K8s with Cluster API

As mentioned earlier, we first need a running Kubernetes cluster, either on our local machine or somewhere else. This first Kubernetes cluster will be our bootstrap / management cluster, on which we are going to deploy Cluster API and from which we bootstrap a target worker cluster named aws-cluster-1 on AWS.

For the initial bootstrap cluster on our machine, which is going to become our management cluster, we can use Kind, k3d, my own kubeadm-multipass implementation or any other solution out there; you have the freedom of choice.

By the way, I tested the whole thing with this nice EKS on ARM implementation by Michael Hausenblas, and it worked like a charm.

And if you want to try this nice kubeadm implementation by Dhiman Halder with Spot Instances support to run your management cluster for $6 / month, please let me know if it worked.

Let’s get started

Prerequisites

A Kubernetes cluster (> 1.15 due to cert-manager support?)

Linux or macOS (Windows is not supported yet)

git cli

make (optional and nice to have)

Homebrew for Linux or macOS

AWS CLI

jq

kustomize

clusterawsadm
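A quick way to verify the list above is a small shell helper that reports which tools are missing from the PATH. This is only a sketch: check_tools is a made-up helper name, kubectl is added on the assumption that you use it to talk to the management cluster, and clusterawsadm is left out because it is built into the bin directory later.

```shell
# Print one line per tool that cannot be found on PATH.
# The return code is the number of missing tools (0 means all present).
check_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool"
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}

# make is optional, so it is not part of the check.
check_tools git aws jq kustomize kubectl ||
  echo "install the tools listed above before continuing"
```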

As per the Cluster API Provider AWS requirements, you must have an administrative user in an AWS account in order to use clusterawsadm. Once you have that administrative user, you need to set the following environment variables:

- AWS_REGION

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_SESSION_TOKEN (if you are using Multi-factor authentication)
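Before going on, it can help to check that these variables are really set in the current shell. The following is a minimal sketch; missing_aws_vars is a hypothetical helper name, and AWS_SESSION_TOKEN is deliberately not checked because it is only needed with multi-factor authentication.

```shell
# Print the names of required variables that are unset or empty.
# Values are never printed, so no credentials end up in the terminal.
missing_aws_vars() {
  for name in AWS_REGION AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "$name"
    fi
  done
}

missing="$(missing_aws_vars)"
if [ -n "$missing" ]; then
  echo "please export:" $missing
else
  echo "AWS environment looks complete"
fi
```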

CAPA: Cluster API Provider for AWS

The core Cluster API (CAPI) project provides kubeadm as the default bootstrap provider, named Cluster API Bootstrap Provider Kubeadm (CABPK). In addition there are infrastructure-specific providers, developed out-of-tree of the core project, which extend CAPI for their own environment. One of them is the Cluster API Provider for AWS (CAPA), which we’re going to use now.

In the following steps we’re going to clone the source of the Cluster API Provider for AWS from GitHub, install kustomize (if not present on your machine), build the clusterawsadm binary with make, make some minor changes to the generate.sh script, create and apply a couple of manifests, and turn our local bootstrap cluster into a management cluster which deploys and manages our first cluster, aws-cluster-1.

Get the source

git clone https://github.com/kubernetes-sigs/cluster-api-provider-aws.git

Install kustomize

brew install kustomize # if not already installed

Build the binaries with make

cd cluster-api-provider-aws/

make binaries

# this builds the clusterawsadm and manager binaries and places them in the bin directory; alternatively, you can download clusterawsadm from the release page and place it in the bin directory, since the generate.sh script expects to find clusterawsadm there

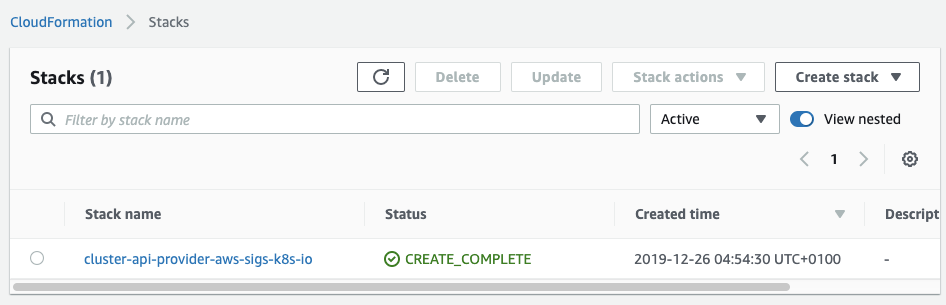

Create the CAPA CloudFormation Stack

# import your public key-pair

aws ec2 import-key-pair --key-name default --public-key-material "$(cat ~/.ssh/id_rsa.pub)"

# set the region

export AWS_REGION=eu-central-1

./bin/clusterawsadm alpha bootstrap create-stack

The clusterawsadm command above creates a CloudFormation stack named cluster-api-provider-aws-sigs-k8s-io. Before proceeding with the next steps, you should see it in the AWS Console with the status “Create Complete”:
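If you prefer the terminal to the AWS Console, the same check can be sketched with the AWS CLI. The function names stack_status and wait_for_stack are made up for this example; the aws cloudformation subcommands are standard AWS CLI commands, and the sketch assumes your credentials and AWS_REGION are already exported.

```shell
STACK_NAME="cluster-api-provider-aws-sigs-k8s-io"

# Print the current status of the bootstrap stack, e.g. CREATE_COMPLETE.
stack_status() {
  aws cloudformation describe-stacks \
    --stack-name "$STACK_NAME" \
    --query 'Stacks[0].StackStatus' \
    --output text
}

# Block until CloudFormation reports the stack as created, then print its status.
wait_for_stack() {
  aws cloudformation wait stack-create-complete --stack-name "$STACK_NAME" &&
    stack_status
}

# Usage (talks to AWS, so it is not run automatically here):
#   wait_for_stack
```

The functions are only defined here, not called, so pasting the block does not contact AWS until you run wait_for_stack yourself.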

Adapt the generate.sh script

You’ll find the generate.sh script under the examples folder of the repo. I’ve changed the cluster name and set the Kubernetes version to 1.16.2 like this:

# Cluster

export CLUSTER_NAME="${CLUSTER_NAME:-aws-cluster-1}"

export KUBERNETES_VERSION="${KUBERNETES_VERSION:-v1.16.2}"
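The two export lines rely on the shell's default-value expansion: the value after :- is used only when the variable is unset or empty. A small, self-contained sketch of that behaviour follows; show_settings is a made-up helper and aws-cluster-2 is a made-up cluster name.

```shell
# ${VAR:-default} expands to $VAR if it is set and non-empty, else to the default.
show_settings() {
  echo "${CLUSTER_NAME:-aws-cluster-1} ${KUBERNETES_VERSION:-v1.16.2}"
}

# Nothing set: the defaults apply.
( unset CLUSTER_NAME KUBERNETES_VERSION; show_settings )
# prints: aws-cluster-1 v1.16.2

# One variable set: it overrides the default, the other default still applies.
( CLUSTER_NAME=aws-cluster-2; unset KUBERNETES_VERSION; show_settings )
# prints: aws-cluster-2 v1.16.2
```

In practice this means that, instead of editing the script, it should also be possible to override a value for a single run, for example with CLUSTER_NAME=aws-cluster-2 ./examples/generate.sh.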

With all that in place we can now generate the needed manifests which will be placed under the examples/_out directory.

We can now run the following commands to turn our bootstrap cluster into the management cluster and deploy a highly available worker cluster with 3 Control Plane nodes and 2 worker nodes:

Cost notice

You’ll make Jeff Bezos happy with approx. $1 for one hour of playing with this tutorial. If you’d like to have only 1 master and 1 worker node, you can replace the generated files with the files from my gist embedded above.

./examples/generate.sh

kubectl create -f examples/_out/cert-manager.yaml

kubectl get all -n cert-manager

# wait for cert-manager to get ready before creating the provider-components

kubectl create -f examples/_out/provider-components.yaml

# create the Cluster CRD, this will create the bastion host in a new VPC

kubectl create -f examples/_out/cluster.yaml

# create the Control Plane (3 stacked master + etcd nodes)

kubectl create -f examples/_out/controlplane.yaml

# Create 2 worker nodes

kubectl create -f examples/_out/machinedeployment.yaml

# wait until the machines are ready and create the kubeconfig file

kubectl --namespace=default get secret aws-cluster-1-kubeconfig -o json | jq -r .data.value | base64 --decode > ./aws-cluster-1-kubeconfig

# create the CNI (Calico)

kubectl --kubeconfig=./aws-cluster-1-kubeconfig apply -f https://docs.projectcalico.org/v3.8/manifests/calico.yaml

# or create CNI for Calico with the addon

kubectl --kubeconfig=./aws-cluster-1-kubeconfig create -f examples/addons.yaml

# enjoy your cluster and machines

kubectl get clusters

kubectl get machines

kubectl get awsmachines

kubectl get machinedeployment

kubectl get node --kubeconfig=./aws-cluster-1-kubeconfig
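The two "wait" comments in the command sequence above can also be scripted. The following sketch wraps them in two helper functions with made-up names; the 300 second timeout is an arbitrary assumption, and the kubeconfig is read with kubectl's jsonpath output instead of jq, which is simply an alternative to the command shown above.

```shell
# Wait until all cert-manager deployments report the Available condition.
wait_for_cert_manager() {
  kubectl wait --namespace cert-manager \
    --for=condition=Available deployment --all --timeout=300s
}

# Print the decoded kubeconfig stored in the <cluster>-kubeconfig secret.
fetch_kubeconfig() {
  cluster="$1"
  kubectl --namespace=default get secret "${cluster}-kubeconfig" \
    -o jsonpath='{.data.value}' | base64 --decode
}

# Usage against the management cluster (not run automatically here):
#   wait_for_cert_manager
#   fetch_kubeconfig aws-cluster-1 > ./aws-cluster-1-kubeconfig
```

The kubeconfig secret only appears once the first Control Plane machine has been initialized, so fetch_kubeconfig may need to be retried while the machines are still coming up.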

With that, we are running a highly available Kubernetes worker cluster on AWS. Our management cluster is running locally; in the near future we’ll be able to move the management cluster to a target cluster by pivoting with clusterctl.

Clean-up and keep our planet happy

To clean up your test environment and keep our planet happy, please run:

kubectl delete -f examples/_out/machinedeployment.yaml

kubectl delete -f examples/_out/controlplane.yaml

kubectl delete -f examples/_out/cluster.yaml

What’s coming next: Day one experience, Day 2 operations

If you’re familiar with the two earlier versions of Cluster API, v1alpha1 and v1alpha2, or have had a look through the CAPI Book, you might have read something about clusterctl and asked yourself why I haven’t mentioned it until now. Well, clusterctl is undergoing a redesign and is currently not available for v1alpha3.

But the good news is that clusterctl will continue to ease the init process and will be optimized for the “day one” experience of using Cluster API, and it will be possible to create a first management cluster with only two commands:

$ clusterctl init --infrastructure aws

$ clusterctl config cluster my-first-cluster | kubectl apply -f -

We’re hiring!

We are looking for engineers who love to work in Open Source communities like Kubernetes, Rancher, Docker, etc.

If you wish to work on such projects please do visit our job offerings page.

Deploy K8s using K8s with Cluster API and CAPA on AWS was originally published in Kubernauts on Medium, where people are continuing the conversation by highlighting and responding to this story.

Read more about Kubernetes services, Kubernetes training and Rancher dedicated as a Service at https://blog.kubernauts.io/deploy-k8s-using-k8s-with-cluster-api-and-capa-on-aws-107669808367?source=rss----d831ce817894---4
